The Learning Analytics Enriched Rubric (LAe-R) is an advanced grading method used for criteria-based assessment. As a rubric, it consists of a set of criteria. For each criterion, several descriptive levels are provided. A numerical grade is assigned to each of these levels.

An enriched rubric contains some criteria and related grading levels that are associated to data from the analysis of learners’ interaction and learning behavior in an LMS course, such as a number of post messages, times of accessing learning material, assignments grades and so on.

Using learning analytics from log data that concern collaborative interactions, past grading performance and inquiries of course resources, the LA e-Rubric can automatically calculate the score of the various levels per criterion. The total rubric score is calculated as a sum of the scores per each criterion.
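The summation described above can be illustrated with a minimal sketch. The criterion names and point values are invented for the example and are not taken from the plugin.

```python
# Hypothetical per-criterion scores: criterion name -> points of the level picked.
criterion_scores = {
    "forum participation": 20,
    "study of course material": 10,
    "previous assignment grades": 30,
}


def total_rubric_score(scores):
    """Return the total rubric score as the sum of the per-criterion scores."""
    return sum(scores.values())


print(total_rubric_score(criterion_scores))  # 60
```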

Contents

2 Creating a new Learning Analytics Enriched Rubric

2.1 Selecting a Learning Analytics Enriched Rubric

2.2 Editing a Learning Analytics Enriched Rubric

2.3 Adding or editing criteria in a Learning Analytics Enriched Rubric

2.4 Checking options for a Learning Analytics Enriched Rubric

2.4.2 Enriched criteria options

2.5 Saving and Previewing a Learning Analytics Enriched Rubric

3 Using a Learning Analytics Enriched Rubric to evaluate users

3.1 Handling enrichment evaluation failure

3.2 Evaluation according to user

3.3 Evaluation according to global scope

4 How users view the Learning Analytics Enriched Rubric

4.1 Preview of a Learning Analytics Enriched Rubric

4.2 View grading results produced by a Learning Analytics Enriched Rubric

5 Backup & restore, template sharing and importing a Learning Analytics Enriched Rubric

6 Grade calculation and Data mining for enrichment in a Learning Analytics Enriched Rubric

6.2 Learning Analytics for enriching the grading method

7 General advice – instructions

Version 2.0 release notes

The new version of LAe-R plugin embeds the following enhancements and characteristics:

- Learning Analytics (LA) are produced according to LMS’s new logging system. The plugin automatically detects the system’s default log store and retrieves the corresponding log data accordingly. Both Standard and Legacy log stores can be used, but not an External log store.

- Up-to-date plugin coding according to the latest code guidelines for advanced grading methods, including web services support.

- Improved rubric layout for editing and viewing.

- Improved layout of warnings and errors.

- Improved layout of criterion evaluation reports for graders and users.

Creating a new Learning Analytics Enriched Rubric

Selecting a Learning Analytics Enriched Rubric

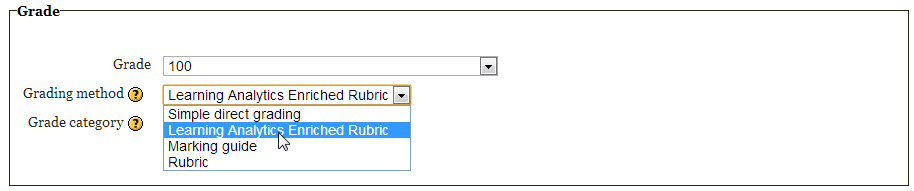

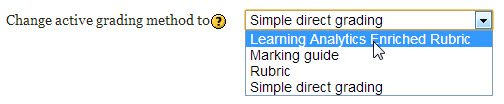

There are two ways a user can choose an LA e-Rubric as an advanced grading method.

- Make the selection during the creation of an assignment, in the Grade section of the creation form.

- Click Advanced grading in the settings block of the assignment and then make the selection from the “Change active grading method to” select field.

Editing a Learning Analytics Enriched Rubric

LA e-Rubric editor

In the Advanced grading page of the assignment, the user can

- Define a new grading form from scratch or,

- Create a new grading form from a template or,

- Edit a current form definition

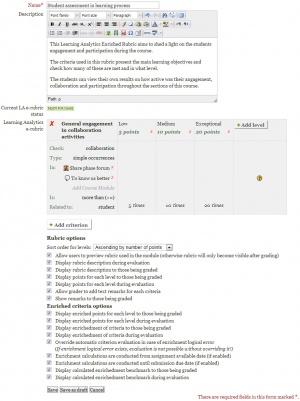

Either way, the grading form editor page appears where the LA e-Rubric can be created or edited.

In that form, the user provides a name for the LA e-Rubric and an optional description, adds or edits the criteria, and chooses the options that meet the requirements.

Then the LA e-Rubric can be saved as a draft (for further editing), or saved and made ready for use.

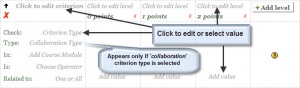

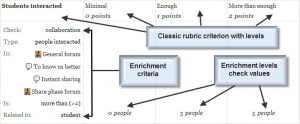

Adding or editing criteria in a Learning Analytics Enriched Rubric

LA e-Rubric add or edit criterion

LA e-Rubric enrichment of criteria

In order to add or edit a criterion, the user can:

- Add or edit the criterion description.

- Add or edit the level description and points values.

- Add or edit the enrichment criterion type (collaboration-grade-study).

- Add or edit the enrichment collaboration type (simple occurrences, file submissions, forum replies, people interacted), if collaboration is chosen as the criterion type.

- Add or delete the corresponding course modules according to criterion type, from which data mining is conducted.

- Add or edit the operator used for enrichment calculations between the enrichment benchmark found and level enrichment check values (equal-more than). This defines if discrete or continuous range values are checked for comparison operations.

- Add or edit the checking scope of calculations according to one user or all (student-students).

- Add or edit the level enrichment check values needed for setting the checkpoints in comparison operations.

Before adding or editing the above form fields the user should consider the following:

- A criterion type must be selected first in order for all other enrichment fields to be edited.

- All enrichment criteria and level values must be edited in case of enrichment.

- To keep a rubric criterion simple, leave enrichment fields blank. Criteria enrichment is not mandatory!

- The criterion type defines the kind of course modules that will be included in the enrichment.

- In case of collaboration check, the collaboration type field is available and mandatory.

- The collaboration type defines what kind of checking will be made from the course modules.

- Collaboration type posts & talks checks simple add-post and talk instances from the logs of the selected course modules.

- Collaboration type file submissions checks the number of files uploaded ONLY in selected forum course modules.

- Collaboration type forum replies checks user(s) replies to posts ONLY in selected forum course modules.

- Collaboration type people interacted checks the number of classmates a user has interacted with, in the selected course modules.

- One or more course modules of the selected criterion type and of the particular course should be added.

- The criterion operator is used for calculating logical conditions associated with the levels enrichment values.

- Related to (All users or One user) defines whether the calculations are made according to the evaluated user alone, in which case absolute values are processed, or according to all other users, in which case percentages are processed.

- If the relation of the criterion is according to the percentage, the arithmetic mean of all other users from all selected course modules will be considered as the users’ benchmark.

- Level enrichment values should be ascending (or descending) according to level ordering, otherwise logical errors may occur during evaluation.
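The comparison between the enrichment benchmark and the level check values can be sketched as follows. This page does not show the plugin's actual selection logic, so the rule used here ("the last level, in rubric order, whose checkpoint is satisfied wins") is an assumption chosen to agree with the ordering example given under Sort order for levels. The names `OPERATORS` and `pick_level` are hypothetical.

```python
import operator

# Assumed mapping of the two documented operators to Python comparisons.
OPERATORS = {
    "equal": operator.eq,      # discrete values
    "more than": operator.ge,  # continuous ranges, documented as >=
}


def pick_level(benchmark, levels, op_name):
    """Return the last level, in rubric order, whose checkpoint is satisfied.

    levels is a list of (points, checkpoint) pairs in the order the rubric
    displays them. Returns None when no checkpoint is satisfied.
    """
    compare = OPERATORS[op_name]
    picked = None
    for points, checkpoint in levels:
        if compare(benchmark, checkpoint):
            picked = (points, checkpoint)
    return picked


levels = [(0, 5), (10, 6), (20, 7), (30, 8)]
print(pick_level(7, levels, "more than"))  # (20, 7)
print(pick_level(7, levels, "equal"))      # (20, 7)
print(pick_level(3, levels, "more than"))  # None
```

A result of `None` corresponds to the case where no level can be picked automatically.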

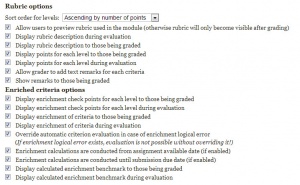

Checking options for a Learning Analytics Enriched Rubric

The following options can be checked while editing an LA e-Rubric:

Rubric options

LA e-Rubric options

- Sort order for levels

Sort level viewing according to grade points ascending or descending.

Important: the ordering of levels is taken into account in enrichment in order to pick the appropriate level according to enrichment checkpoints. For example, if level grade values are 0 – 10 – 20 – 30, enrichment checkpoints should be ascending accordingly, for instance, 5 – 6 – 7 – 8. Using this example, if the enrichment operator is more than (>=), the enrichment benchmark is calculated as 9 and the enrichment checkpoints are 5 – 6 – 8 – 7, then 7 will be picked as opposed to 8!

- Allow users to preview rubric used in the module (otherwise rubric will only become visible after grading)

Checking this option lets users preview the LA e-Rubric before they submit their assignment or are graded.

- Display rubric description during the evaluation

- Display rubric description to those being graded

- Display points for each level to those being graded

- Display points for each level during an evaluation

- Allow grader to add text remarks for each criterion

- Show remarks to those being graded
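The ordering pitfall described under Sort order for levels can be caught before evaluation with a simple monotonicity check. The sketch below is not part of the plugin. The function name is hypothetical, and requiring strictly ascending or strictly descending values is an assumption.

```python
def checkpoints_are_ordered(checkpoints):
    """Return True if checkpoints are strictly ascending or strictly descending."""
    pairs = list(zip(checkpoints, checkpoints[1:]))
    ascending = all(a < b for a, b in pairs)
    descending = all(a > b for a, b in pairs)
    return ascending or descending


print(checkpoints_are_ordered([5, 6, 7, 8]))  # True
print(checkpoints_are_ordered([8, 7, 6, 5]))  # True
print(checkpoints_are_ordered([5, 6, 8, 7]))  # False
```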

Enriched criteria options

- Display enrichment checkpoints for each level to those being graded

- Display enrichment checkpoints for each level during an evaluation

- Display enrichment of criteria to those being graded

Un-check this option to hide enrichment of rubric criteria.

- Display enrichment of criteria during an evaluation

Un-check this option to hide enrichment of rubric criteria.

- Override automatic criterion evaluation in case of enrichment logical error (If enrichment logical error exists, evaluation is not possible without overriding it!)

Check this option to enable the evaluator to pick a level according to his own judgment in case an enrichment benchmark is not found or there is a logical error in the enrichment criteria and a level can’t be automatically picked.

- Enrichment calculations are conducted from assignment available date (if enabled)

If an availability date is defined for the assignment, check this option to timestamp enrichment calculations on data mining.

- Enrichment calculations are conducted until submission due date (if enabled)

If a due date is defined for the assignment, check this option to timestamp enrichment calculations on data mining.

- Display calculated enrichment benchmark to those being graded

- Display calculated enrichment benchmark during an evaluation
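The two date options above restrict which log events take part in the enrichment calculations. A minimal sketch of such a time window follows, assuming each event carries a timestamp under a hypothetical `time` key and that both bounds are inclusive. Neither detail is confirmed by this page.

```python
from datetime import datetime


def within_window(events, available_from=None, due=None):
    """Keep events whose timestamp lies inside the optional [available_from, due] window."""
    kept = []
    for event in events:
        ts = event["time"]
        if available_from is not None and ts < available_from:
            continue  # logged before the assignment became available
        if due is not None and ts > due:
            continue  # logged after the submission due date
        kept.append(event)
    return kept


events = [
    {"user": "alice", "time": datetime(2024, 3, 1, 9, 0)},
    {"user": "alice", "time": datetime(2024, 3, 10, 9, 0)},
    {"user": "alice", "time": datetime(2024, 3, 20, 9, 0)},
]
window = within_window(
    events,
    available_from=datetime(2024, 3, 5),
    due=datetime(2024, 3, 15),
)
print(len(window))  # 1
```

Leaving either bound as `None` mirrors leaving the corresponding option unchecked.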

Saving and Previewing a Learning Analytics Enriched Rubric

The user can save this form as a draft for further checking or save and make it ready to be used immediately. Either way, afterward the user can preview the LA e-Rubric form as it was created or edited.

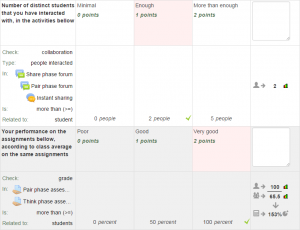

Using a Learning Analytics Enriched Rubric to evaluate users

LA e-Rubric evaluation editor

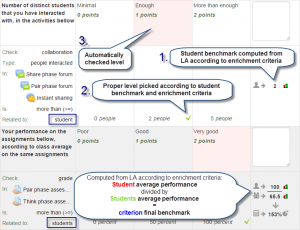

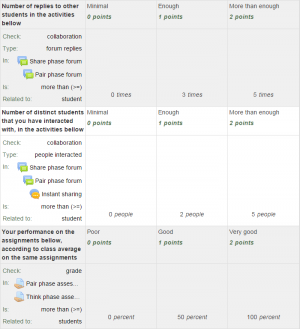

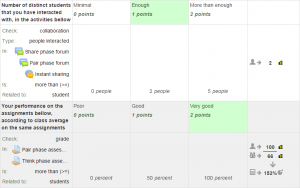

LA e-Rubric evaluation explained

The grading process is where the Learning Analytics Enriched Rubric performs its magic. Data from log files are analyzed so that all enriched criteria can be evaluated automatically and the corresponding criterion level gets a value. The evaluator can provide optional remarks and then click ‘save’ or ‘save and grade next’ to grade a user.

First, the user clicks on View/grade all submissions in the assignment view page, or in the assignment’s settings box. In the grading page of the assignment, the user clicks on the grade icon or chooses Grade from the editing icon in the edit column on the left.

Inside the evaluation form, the user sees all enriched criteria with the enrichment benchmark displayed and the appropriate level chosen for each one. If the enrichment evaluation procedure succeeded, in each criterion the user can see the checking icon of the enriched level whose value corresponds to the benchmark according to enrichment.

Handling enrichment evaluation failure

If the enrichment evaluation failed for an enriched criterion, the evaluator can pick a level according to his own judgment ONLY IF Override automatic criterion evaluation is enabled in the LA e-Rubric options. If the enrichment evaluation fails and the evaluator can’t pick a level manually, user evaluation won’t be possible because not all criteria will have a level checked. In such cases, it is strongly recommended to check the enrichment criteria again to avoid these errors, rather than override the enrichment evaluation procedure.
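The decision described above can be summarized in a short sketch. The names `EnrichmentError` and `resolve_level` are hypothetical, and the rule that a successful automatic evaluation always takes precedence over a manual choice is an assumption.

```python
class EnrichmentError(Exception):
    """Raised when no level can be determined for an enriched criterion."""


def resolve_level(auto_level, manual_level, override_enabled):
    """Return the level to record for one enriched criterion.

    auto_level is None when the automatic enrichment evaluation failed.
    manual_level is the evaluator's own pick, or None if nothing was picked.
    """
    if auto_level is not None:
        return auto_level
    if override_enabled and manual_level is not None:
        return manual_level
    raise EnrichmentError("criterion has no level; evaluation cannot be saved")


print(resolve_level(auto_level=2, manual_level=None, override_enabled=False))  # 2
print(resolve_level(auto_level=None, manual_level=1, override_enabled=True))   # 1
```

With the override disabled and no automatic level, the function raises `EnrichmentError`, which corresponds to the case where user evaluation is not possible.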

Evaluation according to user

If criterion enrichment evaluation is conducted according to user values, the user’s benchmark appears upon success so that the evaluator gets an exact view of the user’s performance.

Evaluation according to global scope

If the enrichment evaluation is conducted according to all participating users, then upon success the evaluator sees two benchmarks: one for the user currently being evaluated, and another for the average score of all participating users. Again, this gives the evaluator a better view of the user’s performance relative to all participating users (including the user being evaluated).

An important point about global scope evaluation is that only users actively participating in the selected course modules of the enrichment are counted, which means there may be fewer of them than users enrolled in the course. This is done for 2 reasons:

- Because the LA e-Rubric performs the qualitative evaluation of users according to those participating, not to all. We want to measure true participation and collaboration results that concern active users only.

- Another equally important reason could be explained with an example: Let’s say that we have 20 users attending a course and we want to evaluate them according to how much they collaborated the past week. Let’s also say that 5 of them were sick the past week, or could not attend. It wouldn’t be right for 5 users missing to bring down the whole week’s average.

The above estimates are effective only for checking collaboration! For checking grades and studying, all enrolled users are accounted for in the process.
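The 20-user example above can be worked through numerically. The sketch assumes that each of the 15 active users posted 8 messages (an invented figure) and that "active" means a count greater than zero. The function name is hypothetical.

```python
def collaboration_average(counts):
    """Average interaction count over users who actually participated (count > 0)."""
    active = [c for c in counts.values() if c > 0]
    return sum(active) / len(active) if active else None


# 20 enrolled users: 15 posted 8 messages each, 5 were absent all week.
counts = {f"user{i}": 8 for i in range(15)}
counts.update({f"absent{i}": 0 for i in range(5)})

print(collaboration_average(counts))        # 8.0 (active users only)
print(sum(counts.values()) / len(counts))   # 6.0 (all enrolled users)
```

Counting the absent users would lower the average from 8.0 to 6.0, which is the distortion the collaboration check avoids.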

How users view the Learning Analytics Enriched Rubric

Preview of a Learning Analytics Enriched Rubric

LA e-Rubric preview

If the corresponding option is engaged, users can preview the LA e-Rubric before they are graded. This is an excellent method to let users know how they are evaluated and get a better view of their evaluation criteria.

In order for the users to preview the LA e-Rubric, they just click Submissions grading in the submenu of their assignment on the left.

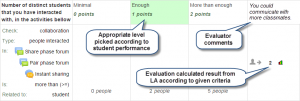

View grading results produced by a Learning Analytics Enriched Rubric

LA e-Rubric view evaluation results

LA e-Rubric evaluation results explained

After grading, users can view how their evaluation occurred and they can also view their own benchmarks according to the LA e-Rubric criteria that affected their evaluation outcome.

The LA e-Rubric elements displayed to users are defined in the LA e-Rubric options.

Users view their completed LA e-Rubric when they visit their corresponding assignment page.

Backup & restore, template sharing and importing a Learning Analytics Enriched Rubric

Procedures concerning backup, restore, import or template sharing are carried out in the same way as for all advanced grading methods of the LMS.

However, regarding the LA e-Rubric, there are some restrictions.

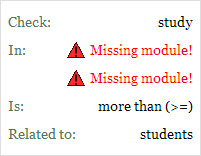

The LA e-Rubric uses specific course modules that reside in the LMS course in which the assignment is created. Thus, when an LA e-Rubric is restored, imported or shared in another course, those particular course modules won’t exist. The structure of the entire LA e-Rubric stays intact, but the user has to replace the missing course modules with similar ones from the new course.

When an entire course is restored, the expected scenario is that most course modules have been given a new id, so this restriction may still apply. Again, the structure of the entire LA e-Rubric stays intact, but the user has to replace the missing course modules with the same ones from the restored course, in order to update the course module ids.

During the sharing procedure of an LA e-Rubric, the user gets an information message concerning this restriction.

If the user imports, restores or uses an LA e-Rubric from another course, another message appears, informing the user about the course modules missing from the enriched criteria and advising the appropriate changes so that the LA e-Rubric becomes operational.

Images below display all these messages.

- LA e-Rubric missing course modules error

- LA e-Rubric form sharing warning

- LA e-Rubric view with errors warning

- LA e-Rubric edit with errors warning

Grade calculation and Data mining for enrichment in a Learning Analytics Enriched Rubric

Grade calculation

Grade calculation is done the same way as in simple rubrics. For more information check Grade calculation.

Learning Analytics for enriching the grading method

The data acquired during the log file analysis are distinguished according to analysis indicators as presented in these cases below.

- For simple occurrences in collaboration, LMS log data concerning added forum posts and chat talks are counted.

- For file submissions in collaboration, the number of files attached to forum post messages is counted.

- For forum replies in collaboration, forum reply post messages are counted (not including replies users have made to their own posts).

- For people interacted, forum post and chat messages data are measured.

- For checking study behavior, the number of users’ views of selected course resources is taken into account.

- For checking grades, LMS grading scores on selected assignments are processed.
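Two of the indicators listed above can be sketched over a toy event list. The record layout below is hypothetical and is not the LMS log schema. Treating "simple occurrences" as new forum posts plus chat talks, with replies counted separately, is likewise an assumption.

```python
# Hypothetical, simplified log records; these field names are NOT the LMS log schema.
log = [
    {"user": "ann", "action": "forum_post", "reply_to": None},
    {"user": "bob", "action": "forum_reply", "reply_to": "ann"},
    {"user": "ann", "action": "forum_reply", "reply_to": "bob"},
    {"user": "ann", "action": "forum_reply", "reply_to": "ann"},  # self-reply
    {"user": "ann", "action": "chat_talk", "reply_to": None},
    {"user": "ann", "action": "forum_reply", "reply_to": "cat"},
]


def simple_occurrences(records, user):
    """Count forum posts and chat talks added by the given user."""
    return sum(
        1
        for r in records
        if r["user"] == user and r["action"] in ("forum_post", "chat_talk")
    )


def forum_replies(records, user):
    """Count replies by the given user, excluding replies to the user's own posts."""
    return sum(
        1
        for r in records
        if r["user"] == user
        and r["action"] == "forum_reply"
        and r["reply_to"] != user
    )


print(simple_occurrences(log, "ann"))  # 2
print(forum_replies(log, "ann"))       # 2
```

The resulting counts play the role of the enrichment benchmark that is compared against the level check values.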

General advice – instructions

- First, create all course resources and activities and then generate an LA e-Rubric.

- Create enrichment criteria carefully and thoroughly to avoid logical errors.

- Don’t delete course resources or activities used in an LA e-Rubric.

- Log data are needed for evaluation, so don’t purge or empty LMS data logs.

Future improvements

Future improvements may be made in order to:

- Visualize Learning Analytics with graphs and charts during evaluation for each criterion.

- Export evaluation outcomes to various formats.

- Import LA e-Rubrics from known rubric creation tools.

- Provide default rubric templates for faster rubric creation.

See also

LMS Docs

- Advanced grading methods regarding general concepts about advanced grading in LMS

- LMS Rubrics regarding guidelines about using rubrics in an LMS course